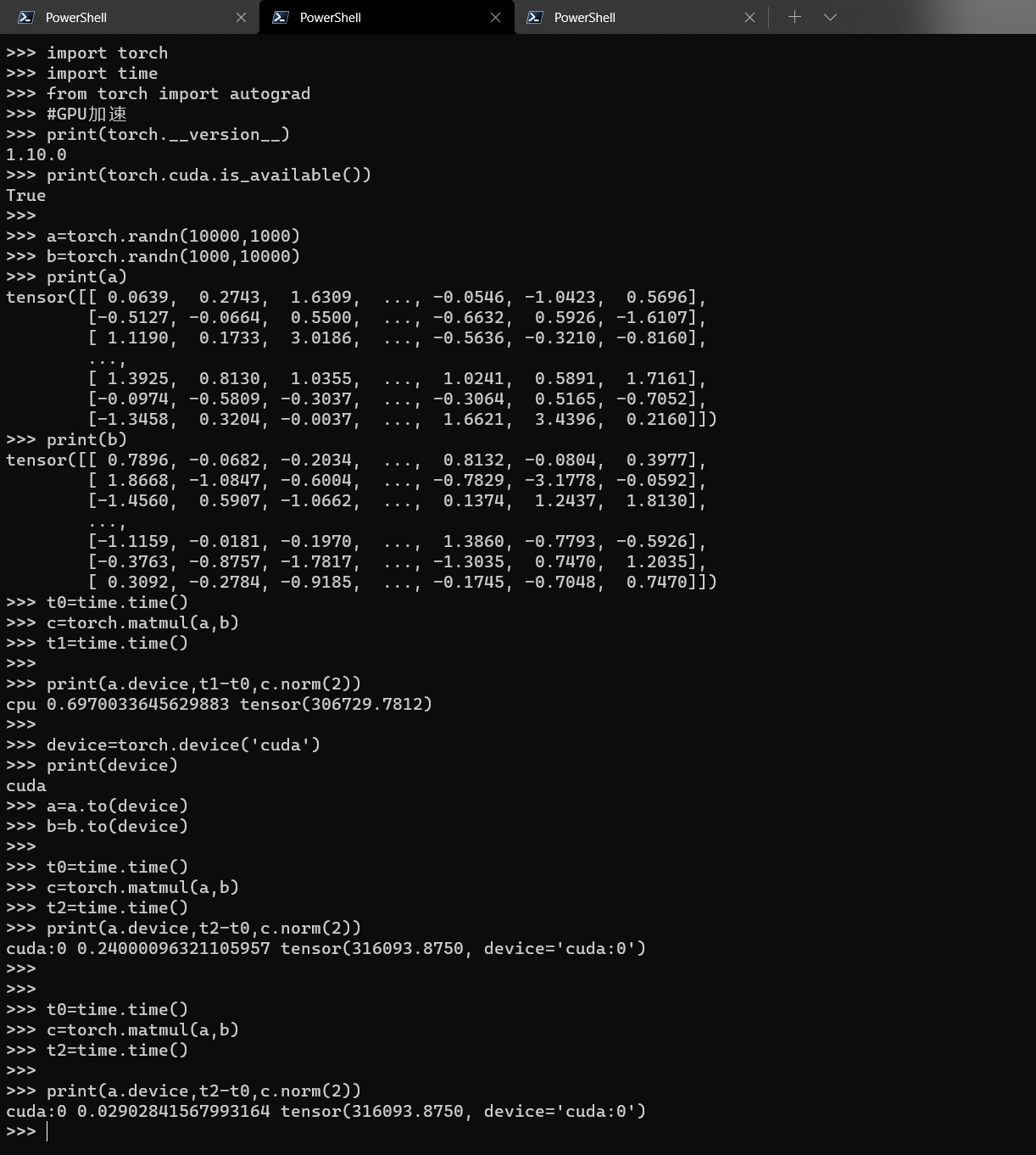

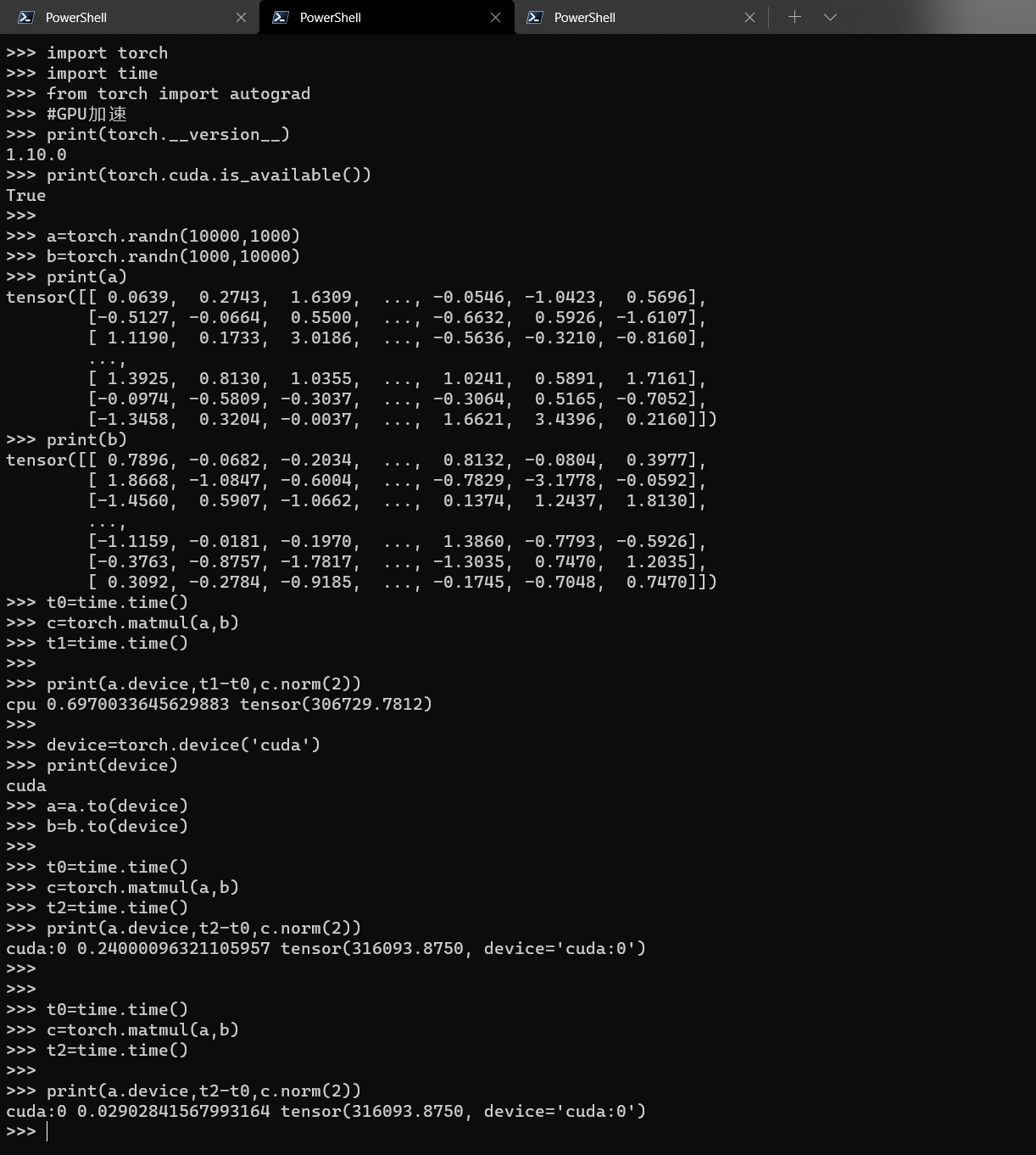

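```python
import time

import torch

print(torch.__version__)
print(torch.cuda.is_available())

# Two large random matrices on the CPU: (10000 x 1000) @ (1000 x 10000)
a = torch.randn(10000, 1000)
b = torch.randn(1000, 10000)
print(a)
print(b)

# CPU matrix multiplication
t0 = time.time()
c = torch.matmul(a, b)
t1 = time.time()
print(a.device, t1 - t0, c.norm(2))

# Move both matrices to the GPU (requires a CUDA-capable device)
device = torch.device('cuda')
print(device)
a = a.to(device)
b = b.to(device)

# First GPU run: also pays one-off warm-up costs (e.g. cuBLAS initialization).
# CUDA kernels launch asynchronously, so synchronize before reading the clock;
# otherwise only the kernel launch is timed, not the multiplication itself.
torch.cuda.synchronize()
t0 = time.time()
c = torch.matmul(a, b)
torch.cuda.synchronize()
t2 = time.time()
print(a.device, t2 - t0, c.norm(2))

# Second GPU run: closer to the steady-state matmul time
torch.cuda.synchronize()
t0 = time.time()
c = torch.matmul(a, b)
torch.cuda.synchronize()
t2 = time.time()
print(a.device, t2 - t0, c.norm(2))
```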
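The script above runs the GPU multiplication twice because the first call includes warm-up overhead, and a single timing is noisy. The same idea can be wrapped in a small reusable helper. The sketch below is stdlib-only and makes some assumptions: `benchmark` and `matmul_gflops` are hypothetical helper names, not PyTorch APIs. It performs a few untimed warm-up calls, accepts an optional `sync` barrier (for GPU work you would pass `torch.cuda.synchronize`), and reports the median of several timed runs. `matmul_gflops` converts an elapsed time into throughput using the usual count of 2·m·k·n floating-point operations for an (m × k) @ (k × n) product. For the matrices here that is 2 × 10000 × 1000 × 10000 = 2 × 10^11 FLOPs.

```python
import statistics
import time
from typing import Callable, Optional


def benchmark(fn: Callable[[], object],
              warmup: int = 1,
              repeat: int = 5,
              sync: Optional[Callable[[], None]] = None) -> float:
    """Return the median wall-clock time of fn() in seconds (hypothetical helper).

    warmup: untimed calls that absorb one-off costs such as GPU library init.
    sync:   optional barrier called before and after each timed call, e.g.
            torch.cuda.synchronize for asynchronous CUDA work.
    """
    # Untimed warm-up calls
    for _ in range(warmup):
        fn()
    # Make sure any queued asynchronous work from warm-up has finished
    if sync is not None:
        sync()

    times = []
    for _ in range(repeat):
        if sync is not None:
            sync()
        start = time.perf_counter()
        fn()
        # Wait for fn's work to complete before stopping the clock
        if sync is not None:
            sync()
        times.append(time.perf_counter() - start)
    # The median is less sensitive to occasional outliers than the mean
    return statistics.median(times)


def matmul_gflops(m: int, k: int, n: int, seconds: float) -> float:
    """Throughput in GFLOP/s of an (m x k) @ (k x n) matmul (2*m*k*n FLOPs)."""
    return 2 * m * k * n / seconds / 1e9
```

With `a` and `b` already on the GPU, a call might look like `t = benchmark(lambda: torch.matmul(a, b), sync=torch.cuda.synchronize)` followed by `matmul_gflops(10000, 1000, 10000, t)`. For more careful measurements, PyTorch also ships `torch.utils.benchmark.Timer`, which handles CUDA synchronization itself.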